This work is licensed under a Creative Commons Attribution 4.0 International License.

Newly launched web apps tend not to have a special backstory to generate natural excitement, or piles of spare cash to splurge on marketing. Instead they must rely on organic search engine traffic to deliver their important first customers.

Search engine optimisation (SEO) is the practice of actively influencing where, when and how your app appears in search engine rankings, to maximise the influx of potential customers. For the best results it requires both short-term tactical tweaks and long-term strategic planning.

A diminishing minority still disparages the need for SEO with the claim that you should just create good content. While high quality content is undeniably a crucial part of the puzzle, that view doesn’t take account of many other factors. For example, how do you know whether to write that your app identifies food alternatives or recipe substitutions? Or, even more subtly, is it a color designer or a colour designer? Such careful word choice is just one of many informed decisions we must make to increase our chances of attracting the maximum number of customers from search engines.

SEO is perhaps even more vital for a web app than a standard website. A web app typically has fewer public pages with which to harness attention and must maximise the exposure of each. Apps also tend to have more complicated interfaces that can trip up search engine crawlers and keep your content out of their indexes, unless the issues are considered and addressed.

A search engine has three essential functions: to crawl, index and rank.

A crawler (or spider) retrieves a webpage, scours it for links, downloads the pages those links point to and repeats the process, ad infinitum. As a result, a newly created website is found when a crawler follows a link to it from an existing website or social media stream, like Twitter. Thanks to the speed of modern crawlers, new websites are rapidly identified and crawled; most old-fashioned ‘submit your site’ forms are unnecessary and irrelevant.
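The crawl loop can be sketched as a breadth-first traversal. This minimal sketch assumes a hypothetical in-memory `LINK_GRAPH` and placeholder `.example` domains. A real crawler would download each page over HTTP and parse it for links. The point is that the new app's pages are reached only because an existing page links to them.

```python
from collections import deque

# Hypothetical link graph standing in for the web: each URL maps to the
# links a crawler would find on that page.
LINK_GRAPH = {
    "https://twitter.example/post": ["https://newapp.example/"],
    "https://newapp.example/": ["https://newapp.example/pricing",
                                "https://newapp.example/tour"],
    "https://newapp.example/pricing": ["https://newapp.example/"],
    "https://newapp.example/tour": ["https://newapp.example/signup"],
    "https://newapp.example/signup": [],
}


def crawl(seeds, fetch_links, max_pages=1000):
    """Breadth-first crawl: retrieve a page, queue its unseen links, repeat."""
    seen = set(seeds)
    queue = deque(seeds)
    order = []  # pages in the order they were retrieved
    while queue and len(order) < max_pages:
        url = queue.popleft()
        order.append(url)
        for link in fetch_links(url):
            if link not in seen:  # never queue the same URL twice
                seen.add(link)
                queue.append(link)
    return order


pages = crawl(["https://twitter.example/post"], lambda url: LINK_GRAPH.get(url, []))
print(pages)
```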

Crawlers can be given simple instructions for a website through a robots.txt1 file in the root of a website, or <meta> tags in the markup2. These can tell crawlers of one or more search engines to ignore sensitive pages or sections of the website. Conversely, an XML sitemaps3 file can be created (and identified to the crawler through the robots.txt file) that lists explicit URLs to crawl. A sitemaps file can list up to 50,000 URLs and can make crawling more efficient for larger websites, though it does not replace the standard crawl and does not guarantee that the crawler will retrieve all of the specified pages.
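The sketch below shows both mechanisms using only Python's standard library. `urllib.robotparser` reads a robots.txt file the way a polite crawler would, and a small helper writes a sitemap in the sitemaps.org XML format. The robots.txt rules, paths and `www.example.com` domain are illustrative assumptions, not recommendations for your app.

```python
import urllib.robotparser
import xml.etree.ElementTree as ET

# Hypothetical robots.txt: keep crawlers out of the logged-in app and
# point them at the sitemap. Paths and domain are illustrative only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /app/
Disallow: /account/

Sitemap: https://www.example.com/sitemap.xml
"""

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit in the sitemaps protocol


def build_sitemap(urls):
    """Return sitemap XML listing each URL in its own <url><loc> entry."""
    if len(urls) > MAX_URLS_PER_SITEMAP:
        raise ValueError("too many URLs: split them across several sitemap files")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


parser = urllib.robotparser.RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("Googlebot", "https://www.example.com/pricing"))    # True
print(parser.can_fetch("Googlebot", "https://www.example.com/app/inbox"))  # False
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
print(build_sitemap(["https://www.example.com/", "https://www.example.com/pricing"]))
```

Real sitemap files usually begin with an XML declaration and may add optional fields such as `<lastmod>`. This sketch emits only the required `<loc>` entries.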

Once a crawler has retrieved a page, the search engine can index it. The indexing process extracts the important keywords, phrases and data from the code that makes up the page, to increase the efficiency of searching billions of pages for user queries.
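An inverted index maps each term to the pages that contain it. It is the textbook structure behind this kind of fast lookup. Below is a toy sketch over three hypothetical pages. Production indexes also store term positions, weights and much more, and how any particular search engine structures its index is not public.

```python
import re
from collections import defaultdict

# Hypothetical pages; a real index covers billions of documents.
PAGES = {
    "/": "Online collaboration for small teams",
    "/whiteboard": "A shared whiteboard online for remote teams",
    "/pricing": "Simple pricing for teams of every size",
}


def tokenize(text):
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each term to the set of pages containing it (an inverted index)."""
    index = defaultdict(set)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term].add(url)
    return index


def search(index, query):
    """Return pages containing every query term by intersecting their sets."""
    postings = [index.get(term, set()) for term in tokenize(query)]
    return set.intersection(*postings) if postings else set()


index = build_index(PAGES)
print(sorted(search(index, "teams")))              # ['/', '/pricing', '/whiteboard']
print(sorted(search(index, "online whiteboard")))  # ['/whiteboard']
```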

The indexing process is made less effective if it encounters invalid HTML and indecipherable multimedia files, and it is enhanced when it detects semantic HTML and microformats that hint at pertinent content on the page.

Search engine indexes are increasingly sophisticated. Rather than extracting simple lists of keywords and their frequency or density of use, search engines attempt to identify which keywords are important for a page using a variety of factors. These include their position in the page structure, their proximity to one another, and topic modelling: knight and pawn might hint at the topic of chess, knight and Arthur at the topics of folklore and literature.

With the index built, the search engine can identify matching pages for a user search query. It must then rank the results of matched pages so that the most relevant are listed first.

Ranking algorithms use a variety of signals to measure the relevance of a page to a query; Google uses over two hundred signals4. The exact signals and their relative weighting are confidential, but many are publicly known and these are often the targets for search engine optimisation.

They include the location of the word in the page title, the response speed of the page and the authority associated with the domain. The most renowned metric for authority is Google’s PageRank measurement, which factors in the number and diversity of incoming links to the page, and how many degrees of separation the page is from known authoritative sources.

Most recently, Google has added user experience and usage metrics as ranking signals5, so that professionally designed, user-friendly websites that attract and retain visitors are given a boost in the search results.

As a consequence of accurate ranking algorithms, people rarely need to click through multiple search engine results pages (SERPs) to find an appropriate result for their query. Studies6 7 suggest that the first result attracts 35–50% of clicks, the second result 12–22% of clicks and the third result 10–12% of clicks. Using the most conservative figures, the top three organic search results draw almost 60% of the clicks (35% + 12% + 10% = 57%).

This makes SEO a winner-takes-almost-all game: you’re better off ranking first for a phrase that 10,000 people a month search for than ranking ninth for a phrase with 200,000 monthly queries.
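The arithmetic behind that claim is easy to check. This sketch takes the conservative 35% first-result click-through rate quoted above and works out how well the ninth result would have to perform to keep up. It assumes no click-through figure for ninth place.

```python
FIRST_RESULT_CTR = 0.35  # conservative end of the 35–50% range quoted above

first_place_clicks = 10_000 * FIRST_RESULT_CTR  # about 3,500 clicks a month
# Click-through rate ninth place would need on the 200,000-search phrase
# just to equal that traffic:
break_even_ctr = first_place_clicks / 200_000   # about 1.75%

print(f"First place: {first_place_clicks:,.0f} clicks per month")
print(f"Ninth place needs a CTR above {break_even_ctr:.2%} to match")
```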

But why doesn’t everyone click on the first result? It all depends on how the displayed result matches the user’s needs and expectations, which may or may not be fully expressed through their query. For example, a typical person looking for a hotel in Vancouver, Canada might generically search for Vancouver hotel.

Top three Google results for Vancouver hotel search

While all top three Google results are highly relevant, the first two contain the words luxury and finest, which may dissuade value-conscious searchers from clicking. Additionally, the third result mentions British Columbia in the displayed URL, which reassures the searcher that the page is relevant to their query and does not concern the other Vancouver in Washington State.

With this in mind, it is important in SEO to not only focus on ranking for a keyword or phrase, but also to consider how the result will appear in the SERPs and how it matches the user’s expectations of relevance, trust and brand.

*I use the term keyword to refer to one or more words, including phrases

It’s likely that you have a newly registered domain, a new website and few incoming links, all negative SEO factors that put you at a massive disadvantage against your competition. It’s critical that you identify and use effective keywords* to stand a chance of ranking well in SERPs and attracting reasonable traffic, rather than opt for generic phrases because they have the widest appeal.

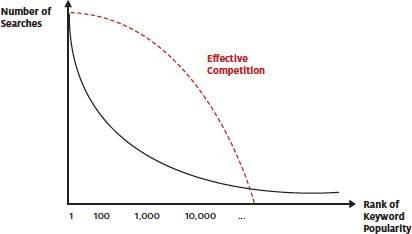

The long tail of keyword searches

If we plot the rank of search terms from the most searched for to the least searched for, they exhibit a typical long tail8. The most popular terms are searched for considerably more frequently than other terms, but the majority of searches reside in the never-ending tail of the distribution.

*Paid adverts attract around 12% of the clicks on a results page

Most websites and web apps scrap over the same popular keywords. As you move down the distribution, Google will almost always have relevant pages to display as results, but most will not be optimised for a specific keyword, effectively reducing the competition to almost zero. As a bonus, long tail keywords tend to have fewer pay per click adverts, removing even more of your competition on the results page*.

Keyword selection should take account of five factors: search volume, trend, competition, relevance and commercial intent.

The most popular keywords are unattainable for a new web app, but we still want to choose long tail keywords that are as active as possible: there’s no point targeting a keyword that few people search for. Remember that a first place rank in the SERPs only attracts 35–50% of the clicks, so a keyword with 1,500 searches per month will generate at most 750 clicks.

Ideally you want the long-term trend of searches for the keyword to be increasing or at least relatively stable. Don’t choose keywords that are going out of fashion, or represent recently introduced terminology that isn’t fully established. It can take months or years for some keywords to rank, so choose for the long term.

Unless you are launching from an existing authoritative website, you will find it difficult to compete for even moderately competitive terms. Select keywords with the least amount of competition possible.

Only target keywords that are highly relevant to your web app. Both free sex and mp3 download have a high search volume but, unless they’re relevant to your web app, there’s no point targeting them.

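We can infer the main objective of the searcher from many keywords. In three broad categories, these objectives are navigational (reaching a specific website or brand), informational (learning about a topic) and transactional (completing an action, such as a purchase).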

Transactional keywords have the highest commercial intent and offer the most value as an SEO target, followed by informational keywords. Navigational keywords often exhibit commercial intent (searching for American Airlines or Hilton San Francisco implies a purchasing decision) but these are impossible to compete against effectively.

As a rule of thumb, the longer and more specific a search query, the higher commercial intent it has. An easy way to quantify commercial intent is to run an AdWords campaign for your potential keywords and measure which elicit the highest click-through rates. Note that you want to measure the number of people who clicked and compare it to the number of people who searched for each term, not just the absolute number of clicks for each term, which will only tell you which term is searched for most frequently.

Percentage intent = (number of people who clicked ÷ number of people who searched) × 100
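As a worked example of the formula, the sketch below ranks three keywords by percentage intent. The search and click counts are invented for illustration. In practice you would approximate the number of people who searched by your ads' impressions.

```python
# Hypothetical AdWords results per keyword: (people who searched, people who clicked).
ad_results = {
    "web collaboration": (12_000, 96),
    "online collaboration tools": (4_000, 140),
    "buy collaboration tool": (900, 54),
}


def percentage_intent(clicked, searched):
    """(number of people who clicked / number of people who searched) x 100"""
    return clicked / searched * 100


intent = {kw: percentage_intent(clicked, searched)
          for kw, (searched, clicked) in ad_results.items()}
for kw in sorted(intent, key=intent.get, reverse=True):
    print(f"{kw}: {intent[kw]:.1f}%")
```

Ranking by raw clicks would put web collaboration (96 clicks) ahead of buy collaboration tool (54 clicks). The percentages reverse that order: 0.8% against 6.0%.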

Now that we know what we need from a keyword, it’s time to get down to some research. You should expect to spend at least one day on this, and it helps if you use a spreadsheet to record and analyse your research data.

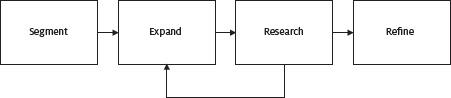

The keyword research process

Identify the main topics for your app. These can be gleaned from the app’s main functions and benefits, often only one or two at MVP stage (project management or online collaboration, for example), and any significant, generic needs identified in your personas, such as group communication, or organising a small team. Group the topics by primary user needs; think of each group as a potential landing page on your site that addresses a particular use case.

| Organisation | Collaboration |
|---|---|
| Project Management | Online Collaboration |
| Organise small team | Group communication |

It’s time to get creative with a little brainstorming. Expand your lists with as many related, relevant terms as possible. Don’t worry about competition, volume, trends or intent at this stage.

Check out competitor web apps for phrases and features, create permutations with plurals and synonyms (app, apps, tool, software, application, utility), and use tools like the Google Keyword Tool11 and Google Sets12 for inspiration.

Researching keyword ideas with the Google Keyword Tool

By now you should have dozens or even hundreds of potential keywords to research. Next, we need to remove any keywords that have negligible search volume.

What is considered as negligible search volume will vary from app to app, depending on its niche and price. Some specific, expensive apps may survive on a small amount of high quality traffic, but most will require modest traffic. Speaking optimistically, if you rank first for a keyword and convert 1% of traffic into paid customers, you need the keyword to be searched for 200 times to produce one customer. Given this, I often use 800 to 1,000 searches per month as the cut-off for negligible search volume, which should result in four or five new customers per month, per keyword.
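The cut-off reasoning reduces to two multiplications. This sketch uses the same optimistic assumptions as the paragraph above: a 50% click-through rate for first place and a 1% conversion rate.

```python
FIRST_PLACE_CTR = 0.50  # optimistic end of the 35–50% first-result range
CONVERSION_RATE = 0.01  # 1% of visitors become paying customers

# Searches needed per customer: 1 / (0.5 x 0.01) = 200
searches_per_customer = 1 / (FIRST_PLACE_CTR * CONVERSION_RATE)

for monthly_searches in (800, 1_000):
    customers = monthly_searches / searches_per_customer
    print(f"{monthly_searches} searches/month -> {customers:.0f} customers/month")
```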

To determine the search volume, paste your list of keywords into the Google Keyword Tool, check the Only show ideas closely related to my search terms box, and choose “Phrase” as the Match Type in the left column, so that the results count only searches containing the words in the specified order.

Researching keyword competition and search volume with the Google Keyword Tool

Download the data into a spreadsheet so that you can add to it later. Any keywords from your list that don’t exceed the minimum value in the Global Monthly Searches column can be filtered out.
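If you prefer a script to a spreadsheet filter, the same step takes a few lines of Python. This is only a sketch. The CSV layout, the sample rows and the `MIN_MONTHLY_SEARCHES` value are assumptions, and the tool's actual export format may differ.

```python
import csv
import io

MIN_MONTHLY_SEARCHES = 800  # your chosen cut-off for negligible volume

# Hypothetical export; the real download's columns and format may differ.
EXPORT = """Keyword,Competition,Global Monthly Searches
online collaboration,0.8,"22,200"
shared whiteboard,0.4,"1,900"
whiteboard online,0.5,"2,400"
collaborative whiteboard app,0.3,390
"""


def parse_volume(value):
    """Convert a figure such as '22,200' to an int."""
    return int(value.replace(",", ""))


rows = csv.DictReader(io.StringIO(EXPORT))
# Keep only keywords whose volume exceeds the cut-off.
keepers = [row for row in rows
           if parse_volume(row["Global Monthly Searches"]) > MIN_MONTHLY_SEARCHES]
for row in keepers:
    print(row["Keyword"], row["Global Monthly Searches"])
```

To read a downloaded file instead, pass `open("keywords.csv", newline="")` (a hypothetical filename) to `csv.DictReader` in place of the `io.StringIO` object.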

Next you need to assess the competition: how difficult will it be to rank for each keyword? The quickest, least accurate way to do this is to use the Competition column in the Google Keyword Tool results. This measures the competition for AdWords rather than organic search listings, but the two tend to correlate.

A better solution is to examine the authority and optimisation of the current top organic results for each keyword. Plenty of commercial software will help you to research this efficiently, like Market Samurai13 or the SEOmoz Keyword Difficulty Tool14, but the most cost-effective method for a cash-strapped start-up is to use a free browser plug-in like SEOQuake for Firefox15.

SEOQuake information in a Google results page for online whiteboard

Install the plug-in and search for one of your potential keywords on Google. Check the titles of the top three results: if a result has the exact search phrase in the title and it’s close to the start of the title, it suggests that the page has been optimised and provides decent competition (this is the case with all top three results in the image above). If you want to be extra cautious, visit each page and check for multiple on-page use of the keyword – a telltale sign of an optimised page.

The SEOQuake plug-in displays a summary of additional SEO information beneath each result. Check the PageRank (PR in the image above), the number of links to the page (L) and the number of links to the domain (LD). If you manage to market your web app effectively and attract diverse authoritative backlinks, you may be able to outrank existing pages with a PageRank of 4 or less and with hundreds of backlinks. A PageRank 5+ with thousands of backlinks should be regarded as high competition, especially in the short term.

Use all of this information to estimate the competitiveness of the keyword. In the example above, I would expect – with some effort – to have a chance of ranking third, but the top spot would be difficult. In this case, where a top three spot is possible but the top spot is unlikely, I’d rate it as medium difficulty. If the top spot seems feasible, rate the keyword as low competition, and if all three top results are established and optimised then flag it as high competition.
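The judgement above can be turned into a rough rule of thumb in code. The thresholds come from the text: PageRank 5+ with thousands of links counts as established. The `Result` fields and the way the three results combine into a rating are assumptions made for this sketch. Treat the output as a first pass, not a substitute for looking at the SERP yourself.

```python
from dataclasses import dataclass


@dataclass
class Result:
    """One of the top three organic results, as read from SEOQuake."""
    page_rank: int   # PR
    page_links: int  # L: links to the page
    optimised: bool  # exact phrase near the start of the title


def is_established(result):
    """PageRank 5+ with thousands of backlinks, per the guide above."""
    return result.page_rank >= 5 and result.page_links >= 1_000


def rate_competition(top_three):
    """low: the top spot looks feasible; medium: only a top-three spot does;
    high: every result is both established and optimised."""
    beatable = [not (r.optimised and is_established(r)) for r in top_three]
    if all(beatable):
        return "low"
    if any(beatable):
        return "medium"
    return "high"


# Hypothetical SEOQuake readings for one keyword.
top_three = [Result(5, 3_200, True), Result(5, 1_500, True), Result(3, 240, True)]
print(rate_competition(top_three))  # medium
```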

Discard high competition keywords and iteratively expand and research any low competition keywords that you haven’t fully explored.

By this point you will have keywords in your list that yield worthwhile traffic and are feasible for you to rank in the top three results. Now you need to choose the best keywords from the bunch.

Recheck them for relevance: if you’re not sure that the keyword really matches your app, remove it. Next, eliminate similar entries. If web collaboration tool and web collaboration tools have similar competition but the plural version has higher volume, keep that. Similarly, if one has lower competition but still has reasonable traffic, retain that version. We will eventually use natural variations of each keyword anyway but, for now, we want to focus on recording specific target keywords.

Open up the Google Keyword Tool with your prospective keywords and display the Local Search Trends column.

Researching keyword search trends

You may spot an occasional keyword that exhibits one or two large peaks in the trend, typically caused by a related item in the news or a product launch, as team collaboration does above. The number of searches is the mean for the twelve months shown in the trend, so be aware that a couple of rare large peaks can inflate it. If the search volume is close to your minimum desired value but the trend appears skewed by irregular peaks, remove the keyword.
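One way to spot a skewed average is to compare the mean of the twelve monthly figures with their median. A one-off spike lifts the mean but barely moves the median. The monthly numbers below are hypothetical, and using the median this way is a suggested heuristic, not part of the process described above.

```python
from statistics import mean, median

MIN_MONTHLY_SEARCHES = 800

# Twelve hypothetical monthly figures with one news-driven spike.
monthly = [420, 450, 430, 410, 5_200, 460, 440, 430, 450, 470, 440, 460]


def skewed_by_peaks(monthly_searches, minimum):
    """True if the mean clears the cut-off but a typical (median) month doesn't."""
    return mean(monthly_searches) >= minimum and median(monthly_searches) < minimum


print(f"mean {mean(monthly):.0f}, median {median(monthly):.0f}")
print("remove" if skewed_by_peaks(monthly, MIN_MONTHLY_SEARCHES) else "keep")
```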

Assign each remaining keyword a relative rating of commercial intent: low, medium or high. If someone could use the keyword to search for general information on a topic (web collaboration), mark it low intent. If the keyword suggests that the person is actively researching a solution (online collaboration tools), mark it medium; and if it hints at an immediate need (buy collaboration tool) score it as high intent.

Choosing target keywords by volume, competition, trend and intent

Order your list of keywords by search volume; you should have a separate list for each of the segments you identified in step one. Your prime targets in each segment will be the three to five keywords with the highest volume that offer the lowest competition and highest intent, relative to others in the list.
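The selection step can be sketched as a sort. This assumes each keyword has already been rated as described above, and the segment data is hypothetical. The priority order used here (competition first, then intent, then volume) is one reasonable reading of "relative to others in the list", not a fixed rule.

```python
from dataclasses import dataclass

LEVEL = {"low": 0, "medium": 1, "high": 2}


@dataclass
class Keyword:
    phrase: str
    volume: int       # monthly searches
    competition: str  # "low", "medium" or "high"
    intent: str       # "low", "medium" or "high"


def prime_targets(keywords, n=5):
    """Return up to n targets for one segment.

    High-competition keywords are dropped; the rest are ordered by lower
    competition, then higher intent, then higher search volume."""
    candidates = [k for k in keywords if k.competition != "high"]
    candidates.sort(key=lambda k: (LEVEL[k.competition], -LEVEL[k.intent], -k.volume))
    return candidates[:n]


# Hypothetical ratings for one segment.
segment = [
    Keyword("online whiteboard", 6_600, "high", "medium"),
    Keyword("shared whiteboard", 1_900, "low", "medium"),
    Keyword("whiteboard online", 2_400, "low", "medium"),
    Keyword("collaborative whiteboard tool", 880, "medium", "high"),
]
print([k.phrase for k in prime_targets(segment, n=3)])
```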

Finally, consider how you might normalise separate keywords into a single sensible phrase. From the list above, we can combine shared whiteboard with whiteboard online to form the single phrase shared whiteboard online. Super-combo breaker!
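You can partly automate the search for combinable phrases like this. The idea is to join two keywords wherever the end of one overlaps the start of the other. Here is a minimal sketch using a hypothetical `merge_overlapping` helper:

```python
def merge_overlapping(first, second):
    """Join two phrases on their longest word overlap, e.g. the end of
    'shared whiteboard' and the start of 'whiteboard online'.
    Returns None if the end of first doesn't overlap the start of second."""
    a, b = first.split(), second.split()
    for size in range(min(len(a), len(b)), 0, -1):
        if a[-size:] == b[:size]:
            return " ".join(a + b[size:])
    return None


print(merge_overlapping("shared whiteboard", "whiteboard online"))  # shared whiteboard online
```

The helper only checks one direction, so try each pair both ways round. It will also happily produce phrases nobody would type, so judge each result before adopting it.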

Keep your list to hand; you’ll need it for the next task.

Webpage optimisations are notionally designed for search engine crawlers but, happily, many improve user experience too. With some restraint, SEO updates can enhance website navigation and ensure that content expectations are visibly met when the user clicks through from a search results page.

I briefly mentioned earlier that authority, or PageRank, is a significant ranking factor, earned through links from external websites: the more trustworthy the linking website, the more authority a link bestows. Authority flows similarly through internal links on your website:

The flow of authority between pages on a website

In the diagram above, A represents your homepage. Most news outlets, blogs and directories will link to your homepage, making it the most authoritative page on your website.

The homepage will contain menus and other internal links, in this example to pages B, C and D. The authority assigned to the homepage, minus a damping factor, is divided between the subpages and cascaded down. This flow of authority continues from each page to linked subpages, becoming weaker with every link.

Like the homepage, a subpage gains additional authority if it attracts external links. In the diagram, page N is a blog post that has gone viral, drawing attention and links from important blogs. Page N is thus assigned more authority than its siblings L and M, and will be more likely to rank in a SERP (all things being equal). It also possesses more authority to pass on to pages that it links to: in this example, to page K.
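The cascade described above is the idea behind the PageRank algorithm as originally published. It can be computed by repeatedly passing rank along links. The sketch below runs that classic calculation on a hypothetical link graph loosely modelled on the diagram. It uses a damping factor of 0.85, the value suggested in the original paper. Google's current calculations are confidential and far more elaborate, so treat this purely as an illustration of how authority flows.

```python
DAMPING = 0.85  # damping factor suggested in the original PageRank paper

# Hypothetical link graph loosely based on the diagram. A is the homepage;
# EXT1-EXT3 stand in for external blogs, two of which link to the viral post N.
LINKS = {
    "A": ["B", "C", "D"],
    "B": ["L", "M", "N"],
    "C": ["A"],
    "D": ["A"],
    "L": ["A"],
    "M": ["A"],
    "N": ["K", "A"],
    "K": ["A"],
    "EXT1": ["A"],
    "EXT2": ["N"],
    "EXT3": ["N"],
}


def pagerank(links, damping=DAMPING, iterations=50):
    """Classic power-iteration PageRank over {page: [linked pages]}."""
    pages = list(links)
    n = len(pages)
    rank = {page: 1 / n for page in pages}
    for _ in range(iterations):
        # Every page receives a small baseline share...
        new_rank = {page: (1 - damping) / n for page in pages}
        for page, targets in links.items():
            if targets:
                # ...plus a damped share of the rank of each page linking to it.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no links spreads its rank across all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


rank = pagerank(LINKS)
for page in sorted(rank, key=rank.get, reverse=True):
    print(f"{page}: {rank[page]:.3f}")
```

Splitting each page's rank equally between its links corresponds to the random surfer model. The reasonable surfer model instead weights links by how likely a reader is to follow them.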

There are several steps we can take to make the most of the movement of authority from one page to another.

Every page on your website should have a single URL, so that links are focused on a single location. For example, never link to your homepage as both http://app.com/ and http://app.com/index.html. Always use the root URL, or some blogs may link to one version and some blogs to the other. If this happens, the incoming authority will be divided between two URLs rather than building strong authority at a single URL.

Similarly, if you own multiple top level domains (TLDs) for your web app (like app.com and app.net) decide on the primary TLD and use a 301 redirect to forward all requests from the secondary domain to the primary domain. Do not mirror the website at each TLD, which splits the incoming authority.
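Both rules can be enforced in one place on the server. The sketch below is a minimal WSGI middleware and assumes a Python web stack. Its `canonical_url` rules are illustrative assumptions for the `app.com` example above: `app.net` and `www.app.com` are treated as secondary hosts, and a trailing `index.html` is stripped. Most web servers and frameworks offer equivalent redirect configuration.

```python
from urllib.parse import urlsplit, urlunsplit

CANONICAL_HOST = "app.com"                    # the primary domain chosen above
SECONDARY_HOSTS = {"app.net", "www.app.com"}  # hypothetical duplicates


def canonical_url(url):
    """Map a URL variant onto the single URL that links should point to."""
    scheme, host, path, query, _fragment = urlsplit(url)
    if host in SECONDARY_HOSTS:
        host = CANONICAL_HOST
    if path.endswith("/index.html"):
        path = path[: -len("index.html")]  # /index.html -> /, /docs/index.html -> /docs/
    return urlunsplit((scheme, host, path or "/", query, ""))


def redirect_middleware(app):
    """WSGI middleware: 301-redirect non-canonical requests, serve the rest."""
    def wrapper(environ, start_response):
        requested = urlunsplit((
            environ["wsgi.url_scheme"],
            environ.get("HTTP_HOST", CANONICAL_HOST),
            environ.get("PATH_INFO", "") or "/",
            environ.get("QUERY_STRING", ""),
            "",
        ))
        target = canonical_url(requested)
        if target != requested:
            start_response("301 Moved Permanently", [("Location", target)])
            return [b""]
        return app(environ, start_response)
    return wrapper


# Usage with a stand-in application and start_response:
def site(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


responses = []
app = redirect_middleware(site)
app({"wsgi.url_scheme": "http", "HTTP_HOST": "app.net",
     "PATH_INFO": "/pricing", "QUERY_STRING": ""},
    lambda status, headers: responses.append((status, dict(headers))))
print(responses[-1])  # ('301 Moved Permanently', {'Location': 'http://app.com/pricing'})
```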

Every page on your marketing website should serve a purpose. Each link on a page dilutes the amount of authority that flows to the other links, so don’t create a page unless it serves a user or business goal. It was once thought that you could block the flow of authority through a rel="nofollow" attribute on an individual link, but it is now unclear16 whether this PageRank sculpting technique actually does preserve authority for the remaining links on the page. In case it does, consider adding the rel="nofollow" attribute to links to pages that don’t need to rank in SERPs, such as links to the login page.

*This problem is called keyword cannibalisation

Every page should focus on a key theme or purpose. In addition to the standard web app marketing pages (benefits, features tour, pricing, sign up, and so on), you should include landing pages for each of the primary keyword segments you identified. This allows you to focus a set of specific keywords on each page rather than duplicating target SEO keywords across multiple pages. Without focused pages, you may inadvertently optimise multiple pages for the same keywords*, which has a negative impact if a search engine can’t easily calculate which of your pages to rank more highly for a specific keyword.
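A simple check for cannibalisation is to record the target keywords for each page and look for any keyword assigned to more than one. The page plan below is hypothetical.

```python
from collections import defaultdict

# Hypothetical plan: the target keywords assigned to each marketing page.
PAGE_KEYWORDS = {
    "/": ["online collaboration", "collaboration tools"],
    "/whiteboard": ["shared whiteboard", "whiteboard online"],
    "/features": ["online collaboration", "shared whiteboard"],
}


def cannibalised(page_keywords):
    """Return each keyword targeted by more than one page, with those pages."""
    pages_for = defaultdict(list)
    for page, keywords in page_keywords.items():
        for keyword in keywords:
            pages_for[keyword.lower()].append(page)
    return {kw: pages for kw, pages in pages_for.items() if len(pages) > 1}


for keyword, pages in cannibalised(PAGE_KEYWORDS).items():
    print(f"'{keyword}' is targeted by {', '.join(pages)}")
```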

Whereas many websites have thousands of pages, a web app tends to have a small marketing website where all of the relatively few pages are important. Ensure that your main pages receive plenty of internal authority by linking to them from every page, either in the main menu (benefits, tour, pricing) or a sidebar or footer (for other segment and needs-based landing pages).

Your homepage will have the most authority flowing to it; redistribute it with carefully chosen links. Similarly, link to the most important or underperforming pages (in SEO terms) from viral content and blog posts. You can even be a little sneaky and strategically insert links into a blog post after it becomes popular.

I made a simplification earlier when I hinted that authority is split evenly between links on a page. This was once the case, when Google’s random surfer model assumed that a visitor was equally likely to follow any link on a page.

Google’s updated reasonable surfer model17 takes page structure and user experience into account, assigning more weight or authority to links that are more likely to be followed by a person reading the page. In practice, this means that not only do you have to think about which pages link to other pages, but also where those links appear on the page. Inline links in the first paragraph or two of content are weighted more heavily than links in sidebars or footers. If you link to an important marketing page from a viral blog post, try to include the link inline, towards the beginning of the article.

As a general rule you should try to use descriptive, keyword-optimised anchor text for your links, except when it might negatively affect the user experience. This is often a subtle tweak, like changing view the collaboration features to view the online collaboration features, when you are optimising the linked page for the keyword online collaboration.

The anchor text of an internal link doesn’t carry as much weight as the anchor text from an external link, but it’s still a worthwhile optimisation to make. However, overly optimised anchor text, where dozens of links to a page contain exactly the same keywords, can lead to penalties, so use variations on larger websites.
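To keep an eye on anchor text across a site, you can extract the text of every link and count how often each exact phrase points at each page. In this sketch the `max_share` (80%) and `min_links` (10) thresholds are arbitrary assumptions, not values any search engine has published. The sketch only flags candidates for a human to review.

```python
from collections import Counter, defaultdict
from html.parser import HTMLParser


class AnchorCollector(HTMLParser):
    """Collect (href, anchor text) pairs from the <a> elements in a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.links.append((self._href, text))
            self._href = None


def anchor_texts_by_target(pages):
    """Count how often each (lower-cased) anchor text links to each URL."""
    counts = defaultdict(Counter)
    for page_html in pages:
        collector = AnchorCollector()
        collector.feed(page_html)
        collector.close()
        for href, text in collector.links:
            counts[href][text.lower()] += 1
    return counts


def over_optimised(counts, max_share=0.8, min_links=10):
    """Flag targets where one exact anchor text exceeds max_share of their links."""
    flagged = {}
    for href, texts in counts.items():
        total = sum(texts.values())
        text, uses = texts.most_common(1)[0]
        if total >= min_links and uses / total > max_share:
            flagged[href] = text
    return flagged


# Hypothetical snippets from three pages of a marketing site.
PAGES_HTML = [
    '<p>Try our <a href="/whiteboard">online whiteboard</a> today.</p>',
    '<p>See the <a href="/whiteboard">online whiteboard</a> in action.</p>',
    '<footer><a href="/whiteboard">Online Whiteboard</a></footer>',
]
counts = anchor_texts_by_target(PAGES_HTML)
print(over_optimised(counts, min_links=3))  # {'/whiteboard': 'online whiteboard'}
```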

One final note on links: only link to websites that you trust. Search engines have discovered that spam websites tend to link to other spam websites, so be wary if someone offers to buy a link on your website or otherwise requests a link to a website that doesn’t meet your standards. Don’t link to crap. Put systems in place to prevent spam links in user comments and other user-generated content. Conversely, links to trustworthy websites in your topic area won’t damage your rankings and may even have a small positive effect.

Search engines can only accurately index the text on your page. You should include HTML transcripts for audio and video files, and alt attributes on images. You may even be legally required to add these types of text equivalents under disability discrimination laws in your country18. Images should be optimised with target keywords where appropriate (but not at the expense of accessibility), in both alt attribute and file name19.
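Missing alt attributes are easy to find automatically. This sketch uses Python's standard `html.parser` to list images with no alt attribute at all. It deliberately ignores empty `alt=""` values, which are a legitimate way to mark purely decorative images. The sample markup is hypothetical. Its first image also shows a keyword-bearing file name.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Record the src of every <img> that has no alt attribute at all.

    An empty alt="" is left alone: it is the accepted way to mark an image
    as purely decorative."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "img" and "alt" not in attributes:
            self.missing_alt.append(attributes.get("src"))


checker = MissingAltChecker()
checker.feed(
    '<img src="/img/shared-whiteboard-online.png" alt="Shared whiteboard online">'
    '<img src="/img/divider.png" alt="">'
    '<img src="/img/IMG_0042.png">'
)
checker.close()
print(checker.missing_alt)  # ['/img/IMG_0042.png']
```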

Every page should contain unique substantive content. Be wary of creating multiple pages with few textual differences between them: search engines may not detect a difference and treat them as duplicates.

If you expose hundreds or thousands of public pages on your web app, such as user profile pages, make them as light as possible in file size. By maximising the content to code ratio, you may reduce the chance of them being flagged as duplicates. In addition, it will increase the download performance (one of the many positive ranking signals) and will enable search engines to index more of your pages in each crawl, as many crawlers limit their sessions to a maximum size of total downloaded content.
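There is no standard definition of content to code ratio. A common rough measure is the share of a page's characters that are visible text. The sketch below computes that measure with the standard library, assuming script and style contents don't count as text. The two sample profile pages are hypothetical.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Gather text content, ignoring anything inside <script> or <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._hidden_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._hidden_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._hidden_depth:
            self._hidden_depth -= 1

    def handle_data(self, data):
        if not self._hidden_depth:
            self.chunks.append(data)


def content_to_code_ratio(page_html):
    """Visible text characters divided by total characters in the page."""
    if not page_html:
        return 0.0
    parser = VisibleText()
    parser.feed(page_html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split())  # collapse whitespace
    return len(text) / len(page_html)


lean = "<h1>Ana</h1><p>Designer in Lisbon. Sketches every day.</p>"
bloated = (
    '<div class="wrap"><div class="row"><div class="col">'
    '<script>trackVisit({"page": "profile"});</script>'
    + lean
    + "</div></div></div>"
)
print(f"{content_to_code_ratio(lean):.2f} vs {content_to_code_ratio(bloated):.2f}")
```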

Page URLs on your marketing website should follow some simple rules:

The page title is the big one. For an element that people rarely notice when they visit a page, the <title> element carries a disproportionate amount of importance, both as a ranking signal and as an influence on click-throughs from SERPs.

Include target keywords as close to the start of the title as possible. As each page will have a focused purpose or theme, so must the page title. Choose two or three top priority target keywords related to the current page and form a natural sentence from them. Be careful not to merely list multiple keywords, which will make the title and, therefore, the result in the SERPs seem spam-like and untrustworthy, reducing click-throughs.

An appropriate page title optimised for multiple target keywords might look like the following:

Collaborative editing tools and shared whiteboard online | App Name

(Target keywords: collaborative editing, shared whiteboard, whiteboard online)

While it’s not usually the case for most new web apps, if potential customers in your market are aware of your brand name, include the name at the start of page titles:

App Name | Collaborative editing tools and shared whiteboard online

Google displays up to 70 characters on a results page before cutting off a title with an ellipsis. Longer titles aren’t penalised, but ensure that you include any important keywords in the first 65 or so characters, and consider that longer titles may not be read in full.
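These guidelines translate into a quick automated check. The 70 and 65 character limits are taken from the text above and may change as Google adjusts its results pages. The `check_title` helper itself is a hypothetical sketch.

```python
VISIBLE_TITLE_CHARS = 70  # roughly what Google displays before the ellipsis
KEYWORD_ZONE_CHARS = 65   # keep important keywords within this prefix


def check_title(title, keywords):
    """Return warnings about a page title's length and keyword placement."""
    warnings = []
    if len(title) > VISIBLE_TITLE_CHARS:
        warnings.append(f"may be cut off after: {title[:VISIBLE_TITLE_CHARS]!r}")
    lowered = title.lower()
    for keyword in keywords:
        start = lowered.find(keyword.lower())
        if start == -1:
            warnings.append(f"missing keyword {keyword!r}")
        elif start + len(keyword) > KEYWORD_ZONE_CHARS:
            warnings.append(f"keyword {keyword!r} ends after character {KEYWORD_ZONE_CHARS}")
    return warnings


title = "Collaborative editing tools and shared whiteboard online | App Name"
print(check_title(title, ["collaborative editing", "shared whiteboard", "whiteboard online"]))  # []
print(check_title(title, ["project management"]))  # ["missing keyword 'project management'"]
```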

Be careful of keyword cannibalisation across multiple titles: don’t optimise multiple titles for the same keywords.

Remember to not only formulate titles to include keywords that you want to rank for, but also so that they influence a click-through when they appear in a SERP. If you’re appealing to a particular demographic or market (budget or luxury, for instance), make that relevance clear in the page title to attract attention.

Create <meta> descriptions for your main pages. The description isn’t used as a ranking signal, but it is displayed in SERPs and influences click-throughs.

In 160 characters or fewer, write a compelling summary of the page content that will entice the searcher to follow the link; longer descriptions will be truncated when displayed. Include target keywords in the description: words that match the searcher’s query appear in bold in the SERPs.
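If descriptions are generated from page content, a helper like the one below can keep them within the limit. It is a sketch that assumes plain-text input. It trims at a word boundary and HTML-escapes the result, so quotes or ampersands can't break the tag. A hand-written description that fits in 160 characters is still preferable to a truncated one.

```python
import html

MAX_DESCRIPTION_CHARS = 160


def meta_description(text):
    """Return a <meta name="description"> tag for plain-text input, trimmed
    at a word boundary to 160 characters and HTML-escaped."""
    text = " ".join(text.split())  # collapse whitespace and newlines
    if len(text) > MAX_DESCRIPTION_CHARS:
        # Leave room for a three-dot ellipsis, then drop any partial word.
        text = text[: MAX_DESCRIPTION_CHARS - 3].rsplit(" ", 1)[0] + "..."
    return f'<meta name="description" content="{html.escape(text)}">'


print(meta_description("Plan projects & share an online whiteboard with your small team."))
```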

The page’s main content should principally be crafted for the user rather than search engines, but remember to use your target keywords where possible:

- Wrap <strong> tags around one instance of the target keywords, and similarly for <em> tags. These are thought to add a very small amount of positive weight as a ranking signal.
- Although the <h1> heading on a page doesn’t necessarily contribute strongly as a ranking signal, you should include target keywords in the heading where they feel natural, to match the expectations of users who searched for them and clicked through from a SERP.

SEO aims to optimise organic search rankings and click-through rates from search engine results pages.

- Provide alt text and transcripts for media files.
- Write <meta> descriptions with target keywords for key pages to improve click-throughs.