This work is licensed under a Creative Commons Attribution 4.0 International License.

Testing: it’s one of those potentially dull and yet highly contentious topics. I’ll try to avoid the dull, but there may be some contention brewing on the horizon.

Most programmers work on software that others have created and that others will develop after them. Sharing the code creates a community in which the team of developers live and interact. Preserving code quality not only has a direct impact on how well a developer can do their job, it becomes a moral obligation to their colleagues in the community.

Add to this the responsibility to deliver quality to the paying customer, the direct relationship between app uptime and revenue, plus the difficulty of testing in a complex web ecosystem, and it’s easy to see why web developers have a love/hate relationship with testing.

Web app tests fall into five main categories:

All these tests add up to a lot of time, but do you really need them all? As usual, it depends. Let common sense prevail. If your app is used in a hospital to prescribe medication doses, or it’s a critical financial component in a large enterprise, don’t skimp on the tests. On the other hand, I suspect that most of you are building a spanking new web app that doesn’t impact human safety or hundreds of jobs. The critical thing to remember for new ideas is that your app will probably change.

If you’re following this book’s advice to build a minimum viable product, you will do the bare minimum necessary to get something out the door as quickly as possible to test the waters. Once you know more about your market, you can refine your app and try again, each time inching closer to a product that your customers want.

Focus your tests around the MVP process and apply the same minimal approach. Test what you need to ensure that your product is given a fair chance in the market, but don’t worry about scaling to 100,000 customers or about the long-term testability of your code: it is likely to change significantly in the first few iterations. Your biggest problems right now are identifying desirable features and getting people to use the app.

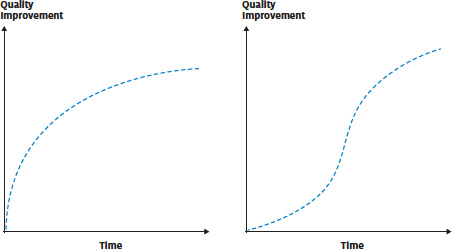

Some types of test require greater investment than others before they pay off.

Some tests yield improvements quickly (the left graph) whereas others require more investment before they start to pay off (the right graph). Be lazy and target the quick wins for your MVP. Invest in medium-term tests when your app finds a foothold in the market, and as your codebase undergoes less change between revisions.

The lazy version of functional testing is more accurately called system testing, which confirms that the app works as a whole but doesn’t validate individual functions of the code.

First, you create a simple test plan listing the primary and secondary app features and paths to test before each release. Next, you or your team should personally use the app to run through the test plan and check for major problems. Finally, ask some friends or beta testers to use the app and report any problems.

That’s it. Any bugs that aren’t discovered through regular activity can probably be disregarded for the time being. It’s not comprehensive or re-usable, but for an MVP app with minimal features it should cover the basics. When your app gains traction and the codebase begins to settle between iterations, it’s time to progress to a medium-term investment in functional testing: automated unit and interface tests that enable efficient regression tests of changes between versions – don’t worry, I’ll decipher what these mean in the next section.

A unit test is a piece of code designed to verify the correctness of an individual function (or unit) of the codebase. It does this through one or more assertions: statements of conditions and their expected results.

To test a function that calculates the season for a given date and location, a unit test assertion could be, “I expect the answer ‘summer’ for 16 July in New York”. A developer normally creates multiple test assertions for non-trivial functions, grouped into a single test case. In the previous example, additional assertions in the test case should check a variety of locations, dates and expected seasons.
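The season example can be sketched as a small test case. This is only an illustration: `season_for`, its latitude-based notion of location, and the use of meteorological seasons are assumptions made for the example, not a prescribed implementation.

```python
import unittest
from datetime import date

NEW_YORK_LATITUDE = 40.7   # illustrative values
SYDNEY_LATITUDE = -33.9


def season_for(day: date, latitude: float) -> str:
    """Hypothetical function under test: meteorological season by month,
    flipped for locations south of the equator."""
    northern = ["winter", "winter", "spring", "spring", "spring", "summer",
                "summer", "summer", "autumn", "autumn", "autumn", "winter"]
    season = northern[day.month - 1]
    if latitude < 0:
        opposite = {"winter": "summer", "summer": "winter",
                    "spring": "autumn", "autumn": "spring"}
        season = opposite[season]
    return season


class SeasonTestCase(unittest.TestCase):
    """Several assertions for one function, grouped into a single test case."""

    def test_new_york_in_july_is_summer(self):
        self.assertEqual(season_for(date(2024, 7, 16), NEW_YORK_LATITUDE), "summer")

    def test_sydney_in_july_is_winter(self):
        self.assertEqual(season_for(date(2024, 7, 16), SYDNEY_LATITUDE), "winter")

    def test_new_york_in_december_is_winter(self):
        self.assertEqual(season_for(date(2024, 12, 1), NEW_YORK_LATITUDE), "winter")
```

A test runner such as `python -m unittest` would discover and run the three assertions together.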

Not all functions are as straightforward to test. Many rely on data from a database or interactions with other pieces of code, which makes them difficult to test in isolation. Solutions exist for these scenarios, such as mock objects and dependency injection, but they create a steep initial learning curve for those new to unit testing.
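As a rough sketch of how dependency injection and a mock object fit together, the example below passes a stand-in database gateway into the code under test. The `OrderTotals` class and the `fetch_order_amounts` method are hypothetical names invented for the illustration.

```python
from unittest.mock import Mock


class OrderTotals:
    """Hypothetical class: the database gateway is injected through the
    constructor instead of being created inside the class."""

    def __init__(self, gateway):
        self.gateway = gateway

    def total_for(self, customer_id):
        # In production the gateway would run a real query.
        amounts = self.gateway.fetch_order_amounts(customer_id)
        return sum(amounts)


def test_total_for_sums_order_amounts():
    # The mock replaces the database, so the test runs in isolation.
    fake_gateway = Mock()
    fake_gateway.fetch_order_amounts.return_value = [10.0, 5.5]

    totals = OrderTotals(fake_gateway)

    assert totals.total_for(42) == 15.5
    fake_gateway.fetch_order_amounts.assert_called_once_with(42)
```

Because the gateway is supplied from outside, the same class can be given a real database connection in production and a mock in tests.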

Unit testing also takes some investment before it pays off. Even a simple web app can contain hundreds of individual functions. Not all require a unit test, but even if the initial tests focus solely on functions that contain critical logic, a significant number of tests may be required to catch the important bugs.

The effort does eventually pay off, however. Commonly stated benefits of unit testing include:

Some developers find unit testing so beneficial that they make it the initial scaffolding from which the app is developed. Under this test-driven development approach, each test is written prior to the functional code, to define the expectations of the function. When it is first run, the test should fail. The developer then writes the minimum amount of code necessary to satisfy the test. With a passing test as a safety net, the developer can then refactor and improve the code iteratively.

Whether or not you decide to adopt test-driven development, hundreds of frameworks are available to ease your implementation of automated unit testing. The Wikipedia list1 is a great place to start.

Web app logic is shifting from the server to the browser. As your app progresses from a simple MVP to a more mature product, the interface code will become more elaborate, and manual tests more cumbersome.

Automated interface tests share the same assertion principle as unit tests: conditions are set and the results are checked for validity. In the case of interface tests, the conditions are established through a number of virtual mouse clicks, form interactions and keystrokes that simulate a user’s interaction with the app. The result is usually confirmed by checking a page element for a word, such as a success message following a form submission.

In terms of automated interface test frameworks, a sole developer or small team of developers who are intimately familiar with the interface code may prefer the strictly code-oriented approach of a tool such as Watir2. For larger teams or apps with particularly dynamic interfaces, the graphical test recording of Selenium3 may be better suited. Both tools support automated tests on multiple platforms and browsers.

Once you’ve started automated interface testing it’s easy to get sucked in, as you try to cover every permutation of user journey and data input. For the sake of practicality, it’s best to ignore business logic in the early days: tests for valid shipping and tax values are better served in unit tests. Instead, create tests for critical workflows like user registration and user login – verify paths through the interface rather than value-based logic.

Print designers and television companies enjoy a luxury unknown to web developers: the limits of their media. We must contend with the rapid proliferation of devices and software with differing capabilities, and must squeeze as much compatibility as we can out of our apps to attract and retain the largest possible audience.

The main causes of web app incompatibility are:

That seems like an awful lot to think about, but the extent to which the technical compatibility factors affect the success of your app will depend on your target market.

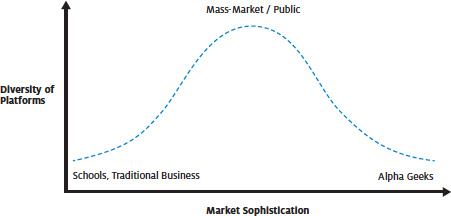

Markets with high and low technical sophistication tend to exhibit less diversity in web software and devices

If your app is targeted at web developers or other technology-savvy users (AKA geeks), you can presume that a majority will have recent web browser versions and sophisticated hardware. Alternatively, for an app designed for a traditional financial enterprise, you may be able to assume a majority of users with Microsoft operating systems and Internet Explorer. Only a mass-market app (Google, Facebook and the rest) must consider the widest possible variation in hardware, software and user capabilities from the get-go.

If you don’t trust your gut audience stereotypes to refine the range of your compatibility tests, use analytics data from your teaser page or MVP advertising campaign. Build a quantified profile of your target market and aim to provide compatibility for at least 90% of the users based on the largest share of browser/operating system/version permutations.
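One way to turn analytics data into a test list is to rank the browser/operating system permutations by visits and keep adding them until the target share is reached. The sketch below assumes the visit counts have already been exported into a dictionary; the function name and the sample figures are invented for illustration.

```python
def permutations_to_test(visit_counts, target_share=0.90):
    """Return the most-visited permutations that together reach the
    target share of visits, plus the share actually covered."""
    total = sum(visit_counts.values())
    if total == 0:
        return [], 0.0

    ranked = sorted(visit_counts.items(), key=lambda item: item[1], reverse=True)
    chosen, covered = [], 0
    for permutation, visits in ranked:
        if covered >= target_share * total:
            break
        chosen.append(permutation)
        covered += visits
    return chosen, covered / total


# Hypothetical analytics export: (browser, operating system) -> visits
sample_visits = {
    ("Firefox 3.6", "Windows"): 400,
    ("IE 8", "Windows"): 300,
    ("Safari 5", "Mac"): 150,
    ("Chrome 10", "Windows"): 100,
    ("IE 6", "Windows"): 50,
}

to_test, share = permutations_to_test(sample_visits)
```

With these sample figures the first four permutations are selected and together cover 95% of visits, so the least-used permutation can be left out of the test plan.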

Worst case: if you can’t assume or acquire any personalised statistics, test compatibility for browsers listed in the Yahoo! A-grade browser support chart6.

It’s important to realise that not all market segments offer equal value. To use a sweeping generalisation as an example, you may find that Mac Safari users constitute a slightly smaller share of your visitors than Windows Firefox users, but they convert to paid customers at twice the rate. It’s important that you measure conversion as quickly as possible in your app lifecycle (you can start with users who sign up for email alerts on the teaser page) and prioritise compatibility tests accordingly.
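The arithmetic behind that generalisation is simple enough to sketch. The segment names and numbers below are made up to mirror the example: the Safari segment is slightly smaller but converts at twice the rate, so it yields more paying customers and would be tested first.

```python
def conversion_rate(segment):
    """Share of a segment's visitors who became paying customers."""
    return segment["paid"] / segment["visitors"]


def rank_by_paying_customers(segments):
    """Order segments by the customers they produce, not by visitor share."""
    return sorted(segments, key=lambda s: s["paid"], reverse=True)


# Hypothetical figures for illustration only.
segments = [
    {"name": "Windows / Firefox", "visitors": 400, "paid": 8},   # 2% conversion
    {"name": "Mac / Safari", "visitors": 350, "paid": 14},       # 4% conversion
]

priority = [segment["name"] for segment in rank_by_paying_customers(segments)]
```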

You can overcome cross-browser inconsistencies if you take advantage of mature front-end JavaScript libraries, CSS frameworks and a CSS reset style sheet. Some additional problems may be resolved by validating your HTML and CSS; use the W3C online validator7 or install a browser validation plug-in to detect and correct mistakes.

To achieve accurate cross-browser compatibility you’ll need regular access to a variety of browsers, versions and operating systems. You may find that online services like Browsershots8 or Spoon9 suit your needs, but to regularly test dynamic web interfaces, nothing beats having a fast local install of the browser, either as a native installation or in a local virtual environment, such as VMware10 or Parallels11. Microsoft handily makes virtual images of IE6, 7 and 8 available12. If you opt for virtualisation, upgrade your computer’s memory to appreciably improve performance.

Aiming for compatibility on all four major browsers, many developers favour a particular testing order:

Like cross-browser tests, accessibility tests are offered by a number of free online web services (WAVE13, for example). Automatic tests can only detect a subset of the full spectrum of accessibility issues, but many of the better services behave as guided evaluations that walk you through the manual tests. For apps in development that aren’t live on the web, or for a faster test-fix-test workflow, you may prefer to use a browser plug-in, like the Firefox Accessibility Extension14.

Some of the most important accessibility issues15 to test include:

Web app responsiveness can be evaluated through performance tests, load tests and stress tests. For the sake of practicality, you may want to consider starting with simple performance tests and hold off on the more exhaustive load and stress tests until you’ve gained some customers. Luckily for us, scalable cloud hosting platforms enable us to be slightly lazy about performance optimisation.

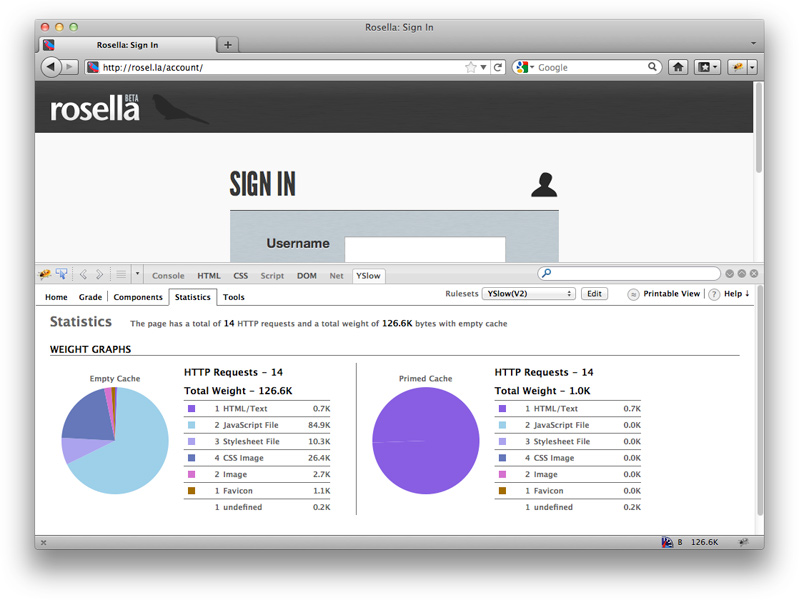

Performance tests measure typical response times for the app: how long do the key pages and actions take to load for a single user? This is an easy but essential test. You’ll first need to configure your database server and web application server with profilers to capture timing information, for example with Microsoft SQL Server Profiler (SQL Server), MySQL Slow Query Log (MySQL), dotTrace16 (.NET), or XDebug17 with Webgrind18 (PHP). You can then use the app, visiting the most important pages and performing the most common actions. The resultant profiler data will highlight major bottlenecks in the code, such as badly constructed SQL queries or inefficient functions. The Yahoo! YSlow19 profiler highlights similar problems in front-end code.

Of course, this isn’t an accurate indication of the final production performance, with only one or two people accessing the app on a local development server, but it’s valuable nonetheless. You can eliminate the most serious obstructions and establish a baseline response time, which can be used to configure a timed performance test as an automated unit or interface test.
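A timed performance test of this kind might look like the sketch below. The `render_dashboard` function stands in for whatever key action you profiled, and the half-second baseline is an arbitrary placeholder; both are assumptions for the example.

```python
import statistics
import time

BASELINE_SECONDS = 0.5  # placeholder: use the baseline you measured


def typical_duration(action, repeats=5):
    """Run the action several times and return the median duration in seconds."""
    durations = []
    for _ in range(repeats):
        started = time.perf_counter()
        action()
        durations.append(time.perf_counter() - started)
    return statistics.median(durations)


def render_dashboard():
    """Hypothetical stand-in for a key page or action in the app."""
    return sum(n * n for n in range(10_000))


def test_dashboard_stays_within_baseline():
    assert typical_duration(render_dashboard) < BASELINE_SECONDS
```

Taking the median of a few runs makes the check less sensitive to a single slow run on a busy development machine.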

Yahoo! YSlow

Load tests simulate the expected load on the app by automatically creating virtual users with concurrent requests to the app. Load is normally incremented up to the maximum expected value to identify the point at which the application becomes unresponsive.

For example, if an app is expected to serve 100 users simultaneously, the load test might begin at 10 users, each of whom makes 500 requests to the app. The performance will be measured and recorded before increasing to 20 users, who each make 500 requests, and so forth.
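The ramp in that example can be sketched in a few lines. This is a simplified illustration rather than a substitute for a real load testing tool: `send_request` is a hypothetical callable that would issue one request to the app, and each virtual user is modelled as a thread.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_virtual_user(send_request, requests_per_user):
    """One virtual user: make the requests in sequence and time each one."""
    timings = []
    for _ in range(requests_per_user):
        started = time.perf_counter()
        send_request()
        timings.append(time.perf_counter() - started)
    return timings


def ramp_load(send_request, max_users=100, step=10, requests_per_user=500):
    """Increase concurrent users step by step; record the mean response time
    in seconds at each level."""
    mean_by_users = {}
    for users in range(step, max_users + 1, step):
        with ThreadPoolExecutor(max_workers=users) as pool:
            futures = [
                pool.submit(run_virtual_user, send_request, requests_per_user)
                for _ in range(users)
            ]
            timings = [t for future in futures for t in future.result()]
        mean_by_users[users] = sum(timings) / len(timings)
    return mean_by_users
```

Calling `ramp_load(my_request)` with the defaults follows the figures above: 10 users, then 20, up to 100, each making 500 requests. The level at which the mean response time climbs sharply indicates where the app starts to struggle.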

New bottlenecks may appear in your web app profiling results, highlighting a need for caching or better use of file locking, or revealing other issues that didn’t surface in the simpler single-user performance test. Load tests can also identify hard limits or problems with server resources, like memory, disk space and so on, so be sure to profile your web server as well with something like top20 (Linux) or Performance Monitor21 (Windows).

Because load tests evaluate the server environment as well as the web app code, they should be run against the live production server(s) or representative development server(s) with similar configurations. As such, the response times will more accurately reflect what the user will experience. Which brings us to an interesting question: what is an acceptable response time?

It all depends on the value of the action. A user is more prepared to wait for a complex financial calculation that could save them hundreds of dollars than to wait for the second page of a news item to load. All things considered, you should aim to keep response times to less than one second22 to avoid interrupting the user’s flow.

Free load testing software packages include ApacheBench23, Siege24, httperf25, and the more graphical JMeter26 and The Grinder27.

A stress test evaluates the graceful recovery of an app when placed under abnormal conditions. To apply a stress test, deliberately remove resources from the environment or overwhelm the application while it is in use:

When the resources are reinstated the application should recover and serve visitors normally. More importantly, the forced fail should not cause any detrimental data corruption or data loss, which may include:

Because a stress test tackles infrequent edge cases, it is another task that you can choose to defer until your app begins to see some success, even if you do have to deal with the occasional consequence, such as manual refunds and cache re-builds.

The discouraging reality is that it’s impossible to be fully secure from all the varieties of attacks that can be launched against your app. To get the best security coverage, it is vital to test the security of your app at the lowest level possible. A single poor cryptography choice in the code may expose dozens of vulnerabilities in the user interface.

There really is no substitute for your team having sufficient knowledge of secure development practices (see chapter 18) at the start of the project. “An ounce of prevention is worth a pound of cure”, as the internet assures me Benjamin Franklin once said, albeit about firefighting rather than web app security.

The next best thing is to instigate manual code reviews. If you’re the sole developer, put time aside to review the code with the intention of checking only for potential security vulnerabilities. If you’re working in a team, schedule regular team or peer reviews of the code security and ensure that all developers are aware of the common attack vectors: unescaped input, unescaped output, weak cryptography, overly trusted cookies and HTTP headers, and so on.

If your app handles particularly sensitive information – financial, health or personal – you should consider paying for a security audit by an accredited security consultant as soon as you can afford to. Web app security changes by the week and you almost certainly don’t have the time to dedicate to the issue.

You should frequently run automated penetration tests, which are not a silver bullet but are useful for identifying obvious vulnerabilities. Many attackers are amateurs who rely on similar automated security test software to attack thousands of websites indiscriminately. By running the software first, you’re guarding against all but the most targeted of attacks against your app. Skipfish28, ratproxy29 and the joyously named Burp Intruder30 are three such tools that can be used in conjunction with data from the attack pattern database fuzzdb31.

For a fuller understanding of web app security testing, put some time aside to read through the comprehensive OWASP Testing Guide32.

See chapter 15 for an in-depth look at usability testing.

In the early stages of your web app’s development you’ll probably manually copy files from your local development computer to your online server. While you have no customers and a simple single server, it’s more valuable to devote your time to iterations of your MVP features than to a sophisticated deployment process.

As the customer base, technical complexity and hosting requirements of your app grow, the inefficiency and fragility of manual SFTP sessions will quickly become apparent.

Automated deployment isn’t only about enforcing the quality of code upgrades and reducing downtime. A solid deployment tool gives you the confidence to push changes to your users more easily, speed up the feedback loop on new features, isolate bugs quicker, iterate faster and build a profitable product sooner.

The simplest form of automated deployment will script the replication of changed files from your local environment to the live server. You can do this through your version control software33 or rsync34, but eventually you’ll run into problems with choreographing file and database changes, differences in local/live configuration, and any number of other technical intricacies.

A better solution assumes that the app deployment has three parts: the local preparation (or build), the transfer of files, and the post-upload remote configuration.

A build is normally associated with the compilation of code into executable files, but the term can also apply to popular interpreted web languages that don’t require compilation, such as PHP or Ruby. For the purposes of deployment, the release build process normally includes:

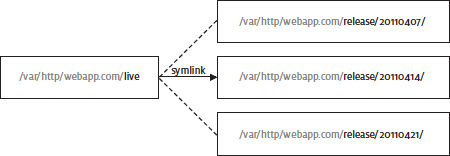

With the build prepared, the files can be transferred to one or more live servers with rsync or a similar utility. The files for each release should be copied to a new time-stamped directory, not directly over the live files.

In the final stage of the deployment, the equivalent of an install script is run on the server to switch from the current release to the newly uploaded release:

Use a symlink to quickly move your website between different versions
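The symlink switch can be sketched as follows, assuming a POSIX server where the web server's document root is a `current` symlink and each release sits in its own time-stamped directory. The function name and directory layout are illustrative assumptions.

```python
import os
from pathlib import Path


def switch_release(current_link: Path, release_dir: Path) -> None:
    """Point the `current` symlink at a newly uploaded release directory."""
    if not release_dir.is_dir():
        raise FileNotFoundError(f"release directory missing: {release_dir}")

    # Build the new link under a temporary name first.
    temp_link = current_link.with_name(current_link.name + ".next")
    if temp_link.is_symlink():
        temp_link.unlink()  # clear a leftover from an interrupted run
    os.symlink(release_dir, temp_link, target_is_directory=True)

    # Renaming over the old link swaps releases in one step on POSIX systems.
    # This assumes `current_link` is absent or already a symlink, not a real
    # directory.
    os.replace(temp_link, current_link)
```

Because earlier releases remain in their own directories, rolling back is the same call with the previous release directory.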

Automated build and deployment tools are readily available in most popular web languages: Jenkins36 and CruiseControl37 (Java), Phing38 (PHP) and Capistrano39 (Ruby) are among those frequently used. Note that, familiarity aside, there’s no reason why your deployment tool has to be written in the same language as your web app. Just because your app is in PHP, it doesn’t mean you should rule out the excellent Jenkins or Capistrano tools from your process.

For an extra layer of confidence in your release process, you should incorporate an intermediate deployment to a staging server, where you test database migrations on a copy of the live database and perform manual acceptance tests. If your team uses your app internally, you can even make the staging server the primary version of the app that you use, so that you ‘dogfood’ the new candidate version for a couple of days before pushing it out to the live server.

How often you release an update will depend on a number of factors: how rapidly you develop features; how much manual testing is required; how much time you have available for testing; and how easy or automated the deployment process is.

You should schedule releases so that you don’t build up a long backlog of changes. Each change adds risk to the release, and feedback is easier to measure when new features are released independently. Some companies like Etsy make dozens of releases a day40, but this continuous deployment approach relies on a serious investment in deployment automation and comprehensive automated test coverage. A more reasonable schedule, as adopted by Facebook and others, is to aim for a release once a week.

Tests and deployment options come in many shapes and sizes; start with critical checks to your core features and gradually expand your test infrastructure as your app features stabilise and your user base grows.